Ambient AI: From Waiting to Watching

The Evolution of Proactive Intelligence

The Evolution of AI Systems

The world of artificial intelligence is on the cusp of a profound shift – from orchestrated systems that execute complex workflows to proactive agents that understand real-world context and act autonomously to assist us. This transition represents not just a technological leap, but a fundamental reimagining of how AI integrates into our daily lives. It presents a rare opportunity to shape the platforms that will underpin the next generation of ambient intelligence.

Looking Back at 2024

The AI landscape in 2024 marked a fundamental transition from reactive chatbots to orchestrated systems. We began the year with chatbots and RAG systems that remained reactive, waiting for human queries before springing into action. These systems could pull from knowledge bases and generate impressive responses, but they operated as passive tools requiring explicit commands.

The shift began in earnest with the mid-year release of Claude 3.5 Sonnet and tools like Cursor. These systems introduced agentic coding assistance, allowing developers to treat AI as a collaborative partner rather than a glorified autocomplete. This was our first glimpse of AI taking initiative within constrained environments, though still fundamentally responding to user-initiated tasks.

By late 2024, the upgraded Claude 3.5 Sonnet (often called “v2”) and the introduction of standardized tool-calling layers like MCP (Model Context Protocol) crystallized this evolution. Instead of providing isolated coding support, we gave AI direct access to development environments and external systems through well-defined interfaces. The model could now observe context, identify opportunities for improvement, and act directly, but still within the confines of a user-initiated loop.

Notably, 2024 also witnessed a significant shift in what constitutes intellectual property for companies building on top of foundational models. In early 2024, proprietary prompt templates were considered the primary IP—carefully crafted instructions that elicited desired behaviors from models. However, as model capabilities rapidly advanced and release cycles accelerated, the moat shifted toward evaluation frameworks (evals). Companies with sophisticated evaluation systems gained the ability to quickly assess new models, understand their capabilities, and integrate them strategically, creating a significant competitive advantage.

The Limits of Today’s Agentic AI

Despite the breathtaking advances in orchestration capabilities over the past year, today’s most sophisticated AI systems are still fundamentally reactive at their core. They may excel at executing complex, multi-step workflows, but they operate primarily in response to human input, beginning their work only when prompted.

A clear distinction has emerged between workflows and agentic systems, driven primarily by the growing scope and autonomy of agentic systems. Anthropic’s blog post ‘Building Effective Agents’ documents the difference between these two architectural patterns well:

- Workflows are systems where LLMs and tools are orchestrated through predefined code paths.

- Agents, on the other hand, are systems where LLMs dynamically direct their own processes and tool usage, maintaining control over how they accomplish tasks.
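
To make the distinction concrete, here is a minimal Python sketch. The tool names and `fake_llm_choose` are hypothetical stand-ins (no real model is called): the workflow hard-codes its sequence of tool calls, while the agent loops and lets the stubbed model pick each next step. Note that both still begin from a human query.

```python
from typing import Callable

# Hypothetical tools; real ones would call search APIs, databases, etc.
TOOLS: dict[str, Callable[[str], str]] = {
    "search": lambda q: f"results for {q!r}",
    "summarize": lambda text: text[:40],
}


def workflow(query: str) -> str:
    """Workflow: the code path (search, then summarize) is fixed in advance."""
    return TOOLS["summarize"](TOOLS["search"](query))


def fake_llm_choose(history: list[str]) -> tuple[str, str]:
    """Stub for an LLM choosing the next step as (action, argument).
    This stub searches once and then finishes; a real model decides dynamically."""
    if len(history) == 1:
        return "search", history[0]
    return "finish", history[-1]


def agent(query: str, max_steps: int = 5) -> str:
    """Agent: the model directs its own process, picking tools until it finishes."""
    history = [query]
    for _ in range(max_steps):
        action, arg = fake_llm_choose(history)
        if action == "finish":
            return arg
        history.append(TOOLS[action](arg))
    return history[-1]  # step budget exhausted
```

The step budget (`max_steps`) is a common safeguard in agent loops, since the model rather than the code decides when to stop.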

While agentic systems can use standardized interfaces like MCP to interact with external environments, databases, and APIs, they remain fundamentally limited by their trigger-response paradigm.

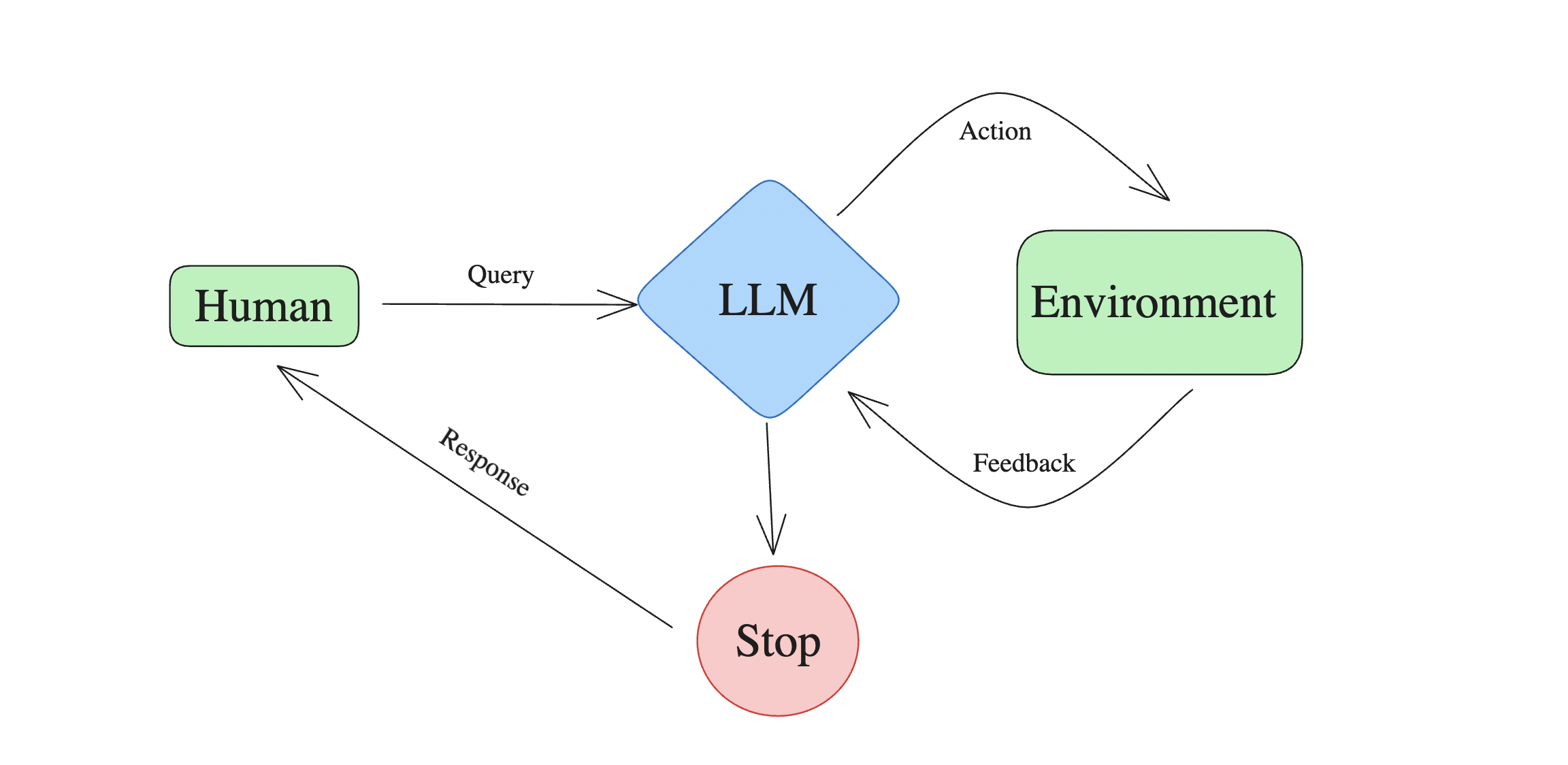

The current Agentic AI paradigm still depends on a human-initiated query to begin the action cycle. Note how the process flow always begins with human input and terminates with either a response to the human or a full stop.

The Promise of Proactive AI

The next frontier of AI is about closing this gap between human and machine initiative. Proactive AI systems will function as always-on, ambient intelligences that can understand context and take action to assist us in real-time.

This isn’t just about more sophisticated tool use—it’s about systems that understand goals, anticipate needs, and act autonomously. Proactive AI systems will maintain persistent awareness of their environment, user patterns, and contextual signals. Instead of waiting for a command to check if your flight is delayed, they’ll monitor travel conditions and proactively adjust your schedule. Rather than responding to code review requests, they’ll identify optimization opportunities and suggest improvements during development.

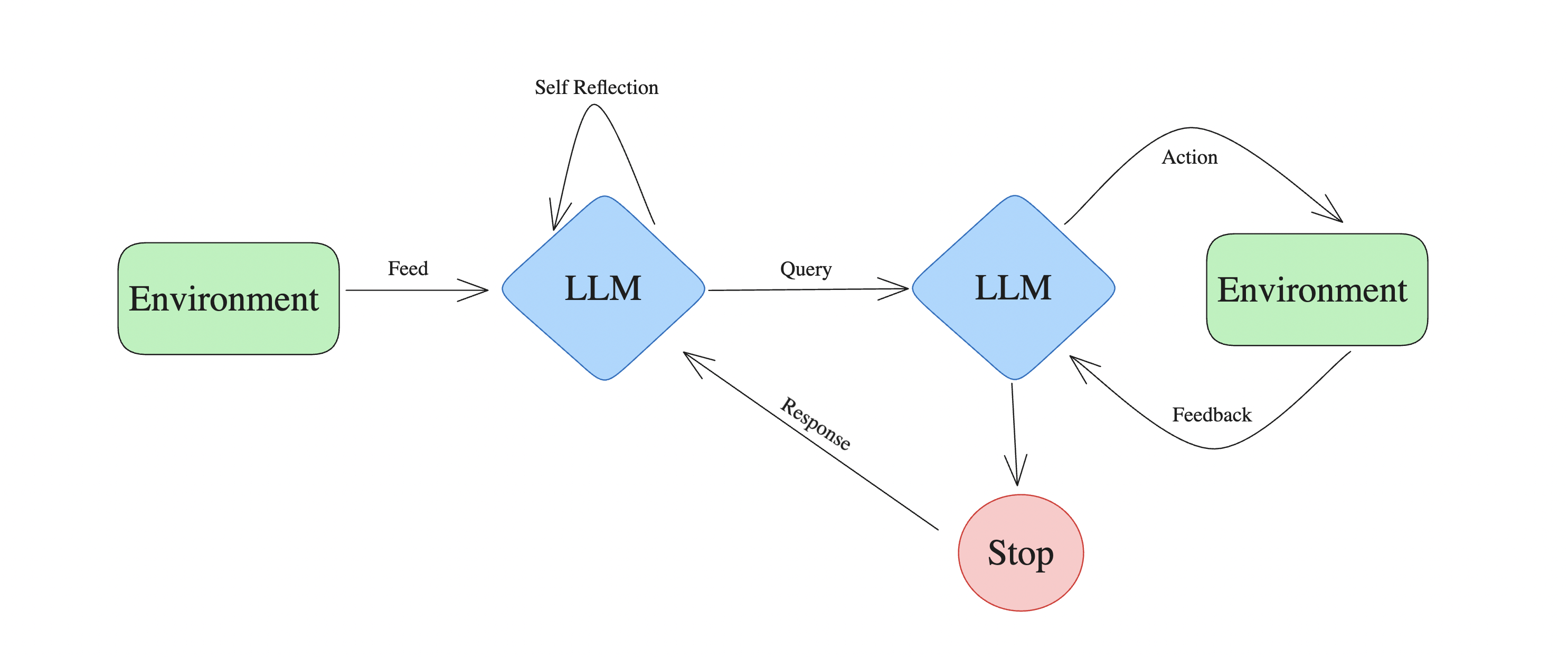

The Proactive AI paradigm introduces a fundamental shift: the environment directly feeds information to the LLM, which can self-reflect, initiate queries, and take actions without human prompting. This represents the crucial evolution from “respond when asked” to “act when needed.”
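
A rough sketch of that inversion, assuming a hypothetical `Event` type and a rule-based `decide` function standing in for the LLM’s self-reflection step: each cycle is started by an environment event (here, a made-up flight-status feed) rather than a human query, and most events produce no action at all.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Event:
    """Something observed in the environment (hypothetical shape)."""
    source: str
    payload: dict


def decide(event: Event) -> Optional[str]:
    """Stand-in for the LLM's self-reflection: does this event warrant action?
    A fixed rule replaces the model call here."""
    if event.source == "flight_status" and event.payload.get("delay_min", 0) > 60:
        return f"propose rescheduling meetings after flight {event.payload['flight']}"
    return None  # not worth interrupting anyone


def proactive_loop(events: list[Event]) -> list[str]:
    """Each cycle is triggered by the environment, not by a human query."""
    actions = []
    for event in events:
        action = decide(event)
        if action is not None:
            actions.append(action)
    return actions


feed = [
    Event("flight_status", {"flight": "XY123", "delay_min": 95}),
    Event("flight_status", {"flight": "XY456", "delay_min": 5}),
    Event("weather", {"city": "Denver", "temp_c": 21}),
]
print(proactive_loop(feed))  # ['propose rescheduling meetings after flight XY123']
```

In practice `decide` would be a model call, and deciding *not* to act matters as much as acting.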

This transition requires infrastructure beyond standardized tool interfaces. We need continuous context monitoring systems, goal inference engines, and sophisticated state management that persists across interactions. The orchestration layer provides the foundation—reliable tool use, error recovery, and multi-step planning—but proactive behavior demands an additional layer of ambient intelligence.
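
State that persists across interactions can start as simply as a store that outlives a single session. The sketch below assumes a JSON file on local disk and a hypothetical `PersistentState` class; a production system would also need concurrency control, schemas, and access policies.

```python
import json
from pathlib import Path
from typing import Any


class PersistentState:
    """Hypothetical key-value memory that survives across sessions
    by writing every update to a JSON file."""

    def __init__(self, path: Path) -> None:
        self.path = path
        # Reload whatever earlier sessions remembered, if anything.
        self.data: dict[str, Any] = json.loads(path.read_text()) if path.exists() else {}

    def remember(self, key: str, value: Any) -> None:
        self.data[key] = value
        self.path.write_text(json.dumps(self.data))

    def recall(self, key: str, default: Any = None) -> Any:
        return self.data.get(key, default)
```

A second `PersistentState` opened on the same path sees everything the first one remembered, which is the property the text asks for, in miniature.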

Building the Infrastructure for Proactive AI

While the proactive AI future is exciting, the reality is that much of the infrastructure needed to support it is still in its infancy. The existing ecosystem of AI tools and platforms is heavily optimized for the orchestration paradigm – executing complex workflows in response to clear commands.

Making the leap to proactive AI will require substantial innovation across the data, software and hardware stack. Rather than focusing on individual AI applications, the more impactful approach is to consider the enabling technologies that will power the entire next wave of proactive experiences.

Here are the key layers of infrastructure that demand our attention:

Continuous Context Monitoring

Proactive AI begins with capturing real-time context – which means a massive scale-up in the systems that connect AI to the physical and digital world.

This is exciting because it will involve building more specialized systems that react to domain-specific data, particularly high-frequency data. Financial transactions, IoT sensor streams, user interface interactions, and communication metadata are all rich domains where continuous monitoring can detect patterns and anomalies that warrant proactive intervention.
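
As a deliberately simple illustration of monitoring a high-frequency stream, the sketch below flags values that deviate sharply from a rolling window (a z-score test). `RollingZScore`, the window size, and the threshold are illustrative assumptions; a real deployment would likely use domain-specific detectors.

```python
from collections import deque
from statistics import mean, pstdev


class RollingZScore:
    """Flags a value lying more than `threshold` standard deviations from
    the mean of the previous `window` values (an illustrative detector)."""

    def __init__(self, window: int = 50, threshold: float = 3.0) -> None:
        self.recent: deque[float] = deque(maxlen=window)
        self.threshold = threshold

    def update(self, x: float) -> bool:
        """Score x against recent history, then add it to that history."""
        anomalous = False
        if len(self.recent) >= 2:
            mu, sigma = mean(self.recent), pstdev(self.recent)
            anomalous = sigma > 0 and abs(x - mu) / sigma > self.threshold
        self.recent.append(x)
        return anomalous


detector = RollingZScore(window=5)
flags = [detector.update(v) for v in [10, 11, 10, 11, 10, 50]]
print(flags)  # [False, False, False, False, False, True]
```

Each `True` would become a candidate trigger for a downstream agent rather than an action in itself.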

Continuous monitoring also presents several underexplored challenges. Suppose, for example, that we use some form of LLM to continuously monitor real-time data feeds and then reformulate what it observes into queries for the existing Agentic Loop. That monitoring loop would need to perform time-series forecasting, and potentially be fused with more traditional ML systems such as recommendation algorithms.
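
Forecasting lets a monitor act before a problem occurs rather than after. A minimal sketch, assuming a least-squares linear trend stands in for a real time-series model: estimate how many more steps remain until a metric crosses a threshold, which the monitor could use to decide when to hand a query to the Agentic Loop. The function name and the disk-usage scenario are hypothetical.

```python
from typing import Optional, Sequence


def steps_until_threshold(series: Sequence[float], threshold: float) -> Optional[float]:
    """Fit a least-squares line to evenly spaced observations and estimate how
    many steps after the latest one the trend reaches `threshold`.
    Returns None when the trend is flat or falling (no crossing ahead)."""
    n = len(series)
    if n < 2:
        raise ValueError("need at least two observations")
    x_mean = (n - 1) / 2
    y_mean = sum(series) / n
    sxx = sum((x - x_mean) ** 2 for x in range(n))
    sxy = sum((x - x_mean) * (y - y_mean) for x, y in enumerate(series))
    slope = sxy / sxx
    if slope <= 0:
        return None
    intercept = y_mean - slope * x_mean
    crossing = (threshold - intercept) / slope  # x where the fitted line hits threshold
    return max(0.0, crossing - (n - 1))         # 0.0 if already past it


# Hypothetical disk usage (%) sampled hourly.
print(steps_until_threshold([50, 55, 60, 65], threshold=80))  # 3.0
```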

The monitoring loop also raises questions about how query reformulation should work. At first, reformulated queries might be expressed in natural language, mimicking how a human would query the Agentic Loop, but a more LLM-native approach might allow higher-bandwidth communication.
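
The two options can be contrasted on a single hypothetical anomaly signal. `to_natural_language` mimics how a human might phrase the question, while `to_structured` passes the same facts as fields (plus a derived ratio) that a receiving agent can read without parsing prose. Both function names and the signal’s fields are illustrative assumptions.

```python
def to_natural_language(signal: dict) -> str:
    """Phrase a monitoring signal the way a human might ask the Agentic Loop."""
    return (
        f"The {signal['metric']} for {signal['entity']} is {signal['value']}, "
        f"compared with a typical {signal['baseline']}. Should anything be done?"
    )


def to_structured(signal: dict) -> dict:
    """Pass the same facts as fields, plus a derived ratio, so the receiver
    does not have to parse prose."""
    return {
        "type": "anomaly",
        **signal,
        "ratio": round(signal["value"] / signal["baseline"], 2),
    }


signal = {"metric": "daily card spend", "entity": "account_42", "value": 900, "baseline": 300}
print(to_natural_language(signal))
print(to_structured(signal))
```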

We’ll need advanced context monitoring and state management systems that can maintain persistent awareness of the environment. These platforms must efficiently track user activities, preferences, and patterns across multiple domains while respecting privacy constraints.

For digital data, the key will be building unified context lakes where a user’s real-time information across communication, productivity and smart home applications can be aggregated, processed and made available to fuel proactive AI decision-making. Expect to see the emergence of dedicated context fusion platforms that solve challenges like schema management, real-time indexing and privacy-first processing.
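
A context lake could start as little more than a time-ordered, schema-normalized store with privacy rules applied at ingestion. The sketch below is a hypothetical in-memory version: records from any source share one `ContextRecord` shape, keys in an assumed `SENSITIVE_KEYS` policy are redacted before storage, and records stay sorted by timestamp so time-window queries are straightforward.

```python
from bisect import insort
from dataclasses import dataclass, field
from typing import Any

# Hypothetical privacy policy: these keys never reach the store in the clear.
SENSITIVE_KEYS = {"email", "phone"}


@dataclass(order=True)
class ContextRecord:
    """One unified schema for every source; records sort by (timestamp, source)."""
    timestamp: float
    source: str
    data: dict[str, Any] = field(compare=False)


class ContextLake:
    """In-memory, time-ordered store of context from many applications."""

    def __init__(self) -> None:
        self.records: list[ContextRecord] = []

    def ingest(self, source: str, timestamp: float, raw: dict[str, Any]) -> None:
        # Apply the privacy policy before anything is stored.
        clean = {k: ("[redacted]" if k in SENSITIVE_KEYS else v) for k, v in raw.items()}
        insort(self.records, ContextRecord(timestamp, source, clean))  # keep time order

    def window(self, start: float, end: float) -> list[ContextRecord]:
        """All records with start <= timestamp < end, oldest first."""
        return [r for r in self.records if start <= r.timestamp < end]
```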

Goal Inference Engines: The Operating System for Proactive AI

Data alone isn’t enough for proactive AI – we need sophisticated systems that can translate context signals into intelligent action without explicit commands. This is where goal inference engines come in – though that’s really just a fancy name for an LLM that has been optimized to solve problems requiring many tools used in novel ways over long time horizons.

What’s fascinating is that nearly all of the alpha in this space for domain-specific tasks is coming from two interconnected areas: evaluation frameworks (evals) and increasingly from reinforcement learning. The core models are getting dramatically better at these general orchestration skills, but the real breakthroughs are happening when organizations build sophisticated evaluation environments that can measure and improve performance in specific domains.

This represents a fundamental reframing of what constitutes an operating system for agentic systems. Instead of just providing a set of APIs and interfaces for tools, the next generation of AI infrastructure will need to include:

- Robust evaluation frameworks that can measure performance across multiple dimensions

- Reinforcement learning pipelines that can fine-tune models based on these evaluations

- State management systems that can persist understanding across multiple interactions

- Causal reasoning engines that can identify meaningful patterns amidst noisy real-world data
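
A minimal eval harness illustrates the first item: run a model over test cases and report one score per dimension. The "models" here are stub functions and the two scorers (`exact_match`, `concise`) are illustrative assumptions; real evals would use far richer cases and graders.

```python
from typing import Callable

Model = Callable[[str], str]
Scorer = Callable[[str, str], float]


def run_eval(model: Model, cases: list[tuple[str, str]], scorers: dict[str, Scorer]) -> dict[str, float]:
    """Run the model on every case and average each scorer: one score per dimension."""
    totals = {name: 0.0 for name in scorers}
    for prompt, expected in cases:
        output = model(prompt)
        for name, score in scorers.items():
            totals[name] += score(output, expected)
    return {name: total / len(cases) for name, total in totals.items()}


# Illustrative scorers for two dimensions: correctness and brevity.
SCORERS: dict[str, Scorer] = {
    "exact_match": lambda out, exp: float(out.strip() == exp),
    "concise": lambda out, exp: float(len(out) <= 3 * len(exp)),
}

CASES = [("capital of France?", "Paris"), ("capital of Japan?", "Tokyo")]


# Stub "models" standing in for real API calls.
def model_a(prompt: str) -> str:
    return {"capital of France?": "Paris", "capital of Japan?": "Tokyo"}[prompt]


def model_b(prompt: str) -> str:
    if "France" in prompt:
        return "Paris"
    return "It is probably Tokyo, Japan's capital."


for name, model in [("model_a", model_a), ("model_b", model_b)]:
    print(name, run_eval(model, CASES, SCORERS))
```

Comparing a new model against the current one on the same `CASES` is the quick assessment step the text credits evals with enabling.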

Organizations with sophisticated evaluation environments and datasets will be able to directly improve model performance in their specific domains, creating a virtuous cycle: better evals lead to better models, which in turn enable more advanced orchestration and, eventually, proactive capabilities.

Consider how this is already playing out in two domains:

- Coding assistants like GitHub Copilot have evolved from simple autocomplete tools to systems that can suggest entire functions and implementations. Organizations with extensive codebases and robust testing frameworks can create high-quality evals that test for correctness, security, and performance, allowing them to fine-tune models specifically for their development environments.

- Financial analysis systems that evaluate model performance against historical market behavior can continuously improve their ability to identify patterns, detect anomalies, and generate insights. The organizations that build the most sophisticated evaluation systems for measuring the quality of these insights will create models that adapt faster to changing market conditions.
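
For the coding case, a correctness eval can be as simple as executing generated code against unit tests and reporting the pass rate. The sketch below assumes the model returns source text defining a named function; the candidate strings are made up. It covers correctness only (security and performance would need separate checks), and calling `exec` on untrusted code is unsafe, so a real harness would run it in a sandbox.

```python
def pass_rate(source: str, func_name: str, tests: list[tuple[tuple, object]]) -> float:
    """Execute model-generated source and return the fraction of tests it passes.
    WARNING: exec() runs arbitrary code; a real harness would sandbox this."""
    namespace: dict = {}
    try:
        exec(source, namespace)
        func = namespace[func_name]
    except Exception:
        return 0.0  # code that does not compile or define the function scores zero
    passed = 0
    for args, expected in tests:
        try:
            if func(*args) == expected:
                passed += 1
        except Exception:
            pass  # a crash counts as a failure
    return passed / len(tests)


TESTS = [((1, 2), 3), ((0, 0), 0), ((-1, 1), 0)]
candidates = {
    "correct": "def add(a, b):\n    return a + b\n",
    "buggy": "def add(a, b):\n    return a - b\n",
    "broken": "def add(a, b) return a + b",
}
for label, src in candidates.items():
    print(label, pass_rate(src, "add", TESTS))
```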

The key insight here is that the goal inference engine is not just a component of proactive AI – it’s effectively the operating system that will drive the transition from today’s orchestrated systems to truly autonomous, context-aware agents. By understanding the interdependence between evaluation frameworks, reinforcement learning, and goal-oriented reasoning, we can build systems that don’t just execute commands well but know when and how to act without being asked.

Agent to Agent Communication: Beyond Natural Language

As we evolve from reactive to proactive AI systems, we must acknowledge that the Augmented LLM (as described in Anthropic’s “Building Effective Agents” blog) is just one foundational component of a multi-agent ecosystem. The introduction of Continuous Context Monitoring agents represents a fundamentally different role within this ecosystem - one focused on persistent awareness rather than responsive action.

This raises a crucial question about how these different agent types should communicate with each other. While we could leverage natural language as the communication medium between these systems - having Context Monitoring agents formulate their observations as prompts for Augmented LLMs - this may not be the most effective approach.

Natural language, for all its flexibility, introduces several inefficiencies when used for agent-to-agent communication:

- Information density: Natural language is inherently sparse compared to more structured data formats. A Context Monitoring agent might need to convey complex pattern recognition across multiple data streams, which would be cumbersome to express in prose.

- Semantic precision: Even the most advanced LLMs still struggle with semantic ambiguity. When one agent needs to communicate nuanced conditions or triggers to another, natural language leaves room for misinterpretation.

- Computational overhead: Parsing and generating natural language requires significant processing compared to more direct communication protocols.

Instead, we should explore native agent-to-agent communication protocols that are optimized for the types of information these systems need to exchange. These might include:

- Structured event schemas that can capture contextual transitions with semantic precision

- Vector-based communication that can directly transmit embedding representations of concepts, states, or goals

- Hybrid approaches where key contextual elements are communicated through structured formats while explanatory elements use natural language
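
One possible shape for a hybrid message, sketched under assumptions (the `AgentMessage` fields are hypothetical, not an existing protocol): structured fields carry precise facts a receiver can test without interpretation, an optional embedding carries a dense representation that can be compared by cosine similarity, and a short natural-language explanation rides along for context. The message serializes to JSON for transport.

```python
import json
import math
from dataclasses import asdict, dataclass, field


@dataclass
class AgentMessage:
    """Hypothetical hybrid message between a monitoring agent and an acting agent."""
    event_type: str                 # structured: what happened
    entity: str                     # structured: what it happened to
    metrics: dict[str, float]       # structured: exact values to test against
    embedding: list[float] = field(default_factory=list)  # dense summary vector
    explanation: str = ""           # natural-language context

    def to_json(self) -> str:
        return json.dumps(asdict(self))

    @classmethod
    def from_json(cls, raw: str) -> "AgentMessage":
        return cls(**json.loads(raw))


def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity, e.g. to route a message to the agent whose goal embedding is closest."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.hypot(*a) * math.hypot(*b))


msg = AgentMessage(
    event_type="flight_delay",
    entity="XY123",
    metrics={"delay_min": 95.0},
    embedding=[0.6, 0.8],
    explanation="Delay overlaps the traveler's 3pm meeting.",
)
received = AgentMessage.from_json(msg.to_json())
if received.metrics["delay_min"] > 60:  # an exact check, no prose interpretation needed
    print("act:", received.explanation)
```

The receiver tests the trigger condition directly against `metrics`, while the explanation remains available if an LLM downstream needs the surrounding context.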

What’s particularly interesting is that we’re essentially describing general agent infrastructure primitives. Just as human organizations have developed specialized communication formats beyond natural conversation (diagrams, spreadsheets, standardized forms), our AI systems will likely develop their own optimized ways to communicate intent, context, and action.

This inter-agent communication layer will be crucial for enabling truly proactive AI systems, as it forms the bridge between environmental awareness and purposeful action. The organizations that develop efficient protocols for this communication will have a significant advantage in building coherent, effective multi-agent systems.

The Path Forward

The evolution from orchestration to proactive AI creates distinct opportunities across several technological layers:

Orchestration Infrastructure (Now through 2025)

- Standardized tool-calling protocols like MCP

- Workflow management systems for complex task execution

- Integration middleware connecting LLMs to enterprise systems

- Frameworks for building reliable agentic workflows

Proactive AI Infrastructure (2025 and beyond)

- Continuous context monitoring and state management systems

- Operating systems for agentic AI with integrated evaluation frameworks

- Native agent-to-agent communication protocols

- Ambient intelligence platforms that maintain persistent environmental awareness

The Coming Age of Ambient Intelligence

The transition from orchestrated to proactive AI represents a rare tectonic shift in the technology landscape. As with the rise of mobile and cloud computing, this new era of ambient intelligence will reshape not just the applications we build, but the underlying infrastructure that powers the entire ecosystem.

The key is to look beyond the hype around any single AI demo or product, and instead focus on the foundational technologies that will enable the broader proactive paradigm. By investing time and attention in the core infrastructural layers – continuous context monitoring, goal inference engines, and privacy-preserving architectures – we can help bring transformative proactive experiences to life.

I expect continuous context monitoring will see the earliest breakthroughs, with viable platforms emerging in the near future. These systems will form the foundation upon which truly proactive AI can be built.

The AI revolution is progressing through distinct phases: from reactive responses to orchestrated execution to proactive action. We’re currently mastering orchestration—teaching AI to use tools reliably and execute complex workflows. The next frontier is teaching AI when to act without being asked. The infrastructure enabling this transition from “executing commands well” to “knowing when to act” will define the next era of artificial intelligence.